Presentation

Since my previous blog entry, in which I had only the key frames for one character, I have fully animated all four characters, each with its own unique animation, ready for submission and presentation.

For the presentation, David and I decided to record ourselves using my laptop camera and software called Camtasia. We wrote a script to make sure we stayed within the 10-minute maximum for our presentation, and recorded each part of the script as a separate video file so that I could later combine them with the slides and video during compositing.

The first draft of our script was too long: once recorded, it came to approximately 16 minutes. So we cut and condensed the script until it addressed the important points as directly and informatively as we could manage. We went through this process three times, finally producing a set of recordings lasting just under 10 minutes.

I took the camera recordings, the cinematic video, and the slides David and I had produced to accompany our recordings, and placed them in After Effects. I chose After Effects for compositing because it is a powerful and flexible video production tool that supported all the formats and codecs we needed, and I have previous experience with it.

The Cinematic:

For submission purposes the cinematic will be at a resolution of 1050 x 576. For this blog it is shown at 1920 x 1080 to show as much detail as possible. This version also has music for theatrical effect, which was left out of the submission version so that everything shown is work that David and I created ourselves.

The Presentation:

Critical Evaluation:

If we look at the schedule in the blog entry for the 5th of February, you can see that for the most part I managed to stay on track with the smaller, detailed tasks and deadlines I set for myself, as well as the important milestone deadlines. However, in the last few weeks of the project I struggled to keep up with the deadline for finishing the final animations ready for rendering and presenting. I can only attribute this to the unforeseen problem of the rig's master controller not working as intended, which I have detailed in earlier blog entries. Animating four unique characters (unique in their animation, not in appearance) without a master controller took much longer than I had hoped, which also affected the quality of the animations. I believe they are good, but had I not had the issue with the rig, I know I could have produced something better. That being said, I am happy with the final cinematic.

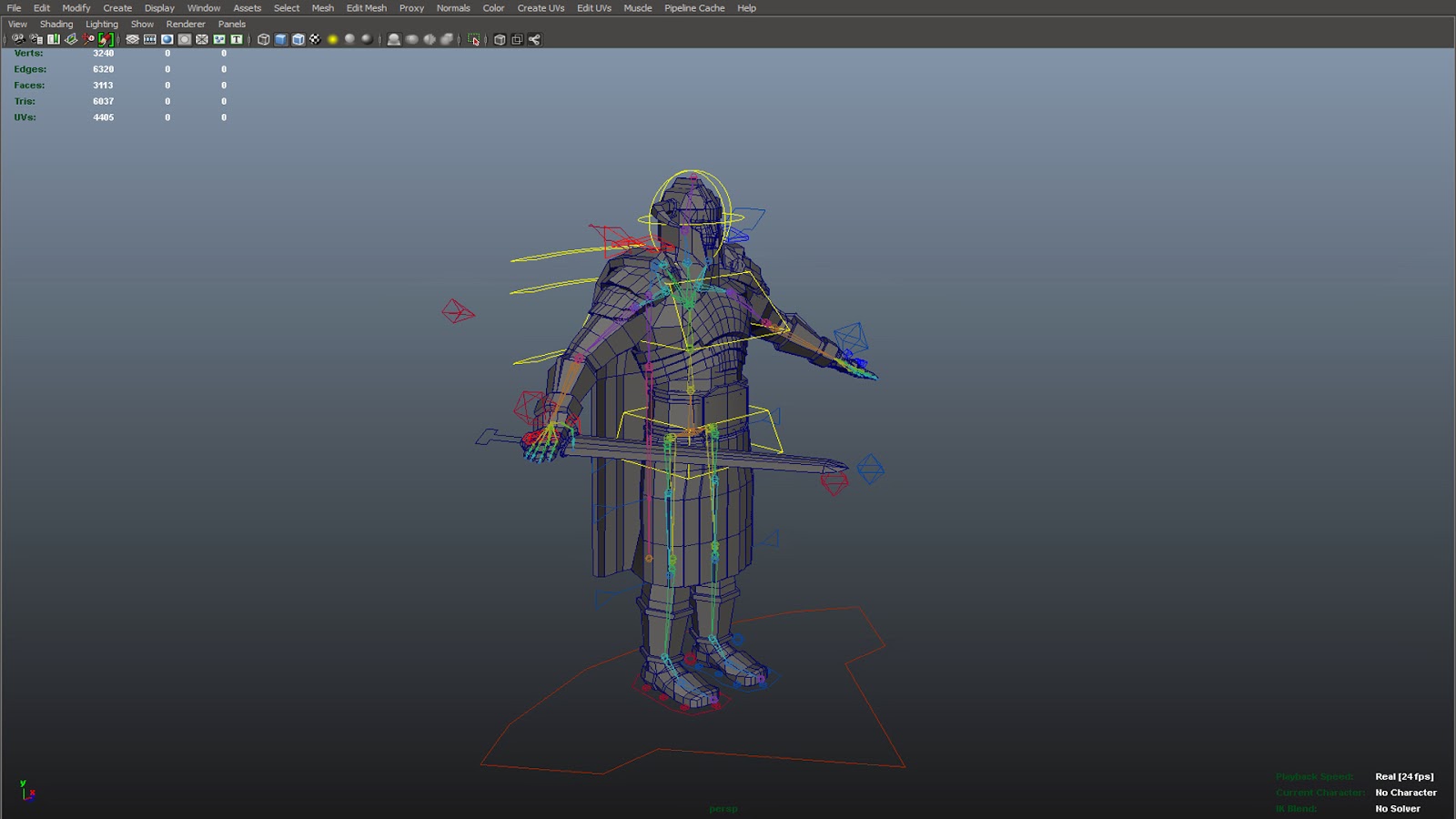

I found this work very informative for future ventures into character modelling, should I decide to try again. Learning this process has helped me understand the technical constraints of character modelling and rigging. My character model was very low-polygon, as I thought this would best emphasise the stone it is meant to be made from. However, I have come to realise that, regardless of the overall polygon count, extra polygons should always be spent on the parts of the topology that need to deform during animation. For example, if I were to make another character or redo this one, I would make sure to spend a few more polygons around the shoulder and armpit, and direct the edge flow so that it can stretch and compress without negatively affecting the character's silhouette or the textures on it. I did research this, and in an earlier entry you can see the .gif of the shoulders rotating on a modelled torso, but evidently I did not implement it as well as I should have.

To improve the cinematic, I think one of the best changes would be to reduce the number of characters to three and give them more polished animation. I did not have enough time to apply the principles of animation to the standard I wanted, and having fewer characters would let me spend more time on each of them. This also suggests that I need a better way to estimate and manage my time and deadlines.

I would also think about creating an emissive texture for the characters, so that when they come to life in the cinematic their eyes and the cracks on their bodies glow or pulse a cold blue. It was suggested that David could do this for the runes found around the environment, and I think doing the same on the characters would complement that.

I would also consider creating a new rig simplified for cinematic use, as the current rig is designed for games, where the engine, not the rig, controls the character's world translation.

As an overall evaluation of the project, I would say David and I worked well together and gave each other constructive feedback that helped raise the quality of our work. The cinematic reads well, and I believe the audience can understand both what is happening in the scene and the scene itself. So with regard to the task of realising the initial environment concept art in real-time 3D, I would say we have accomplished that.